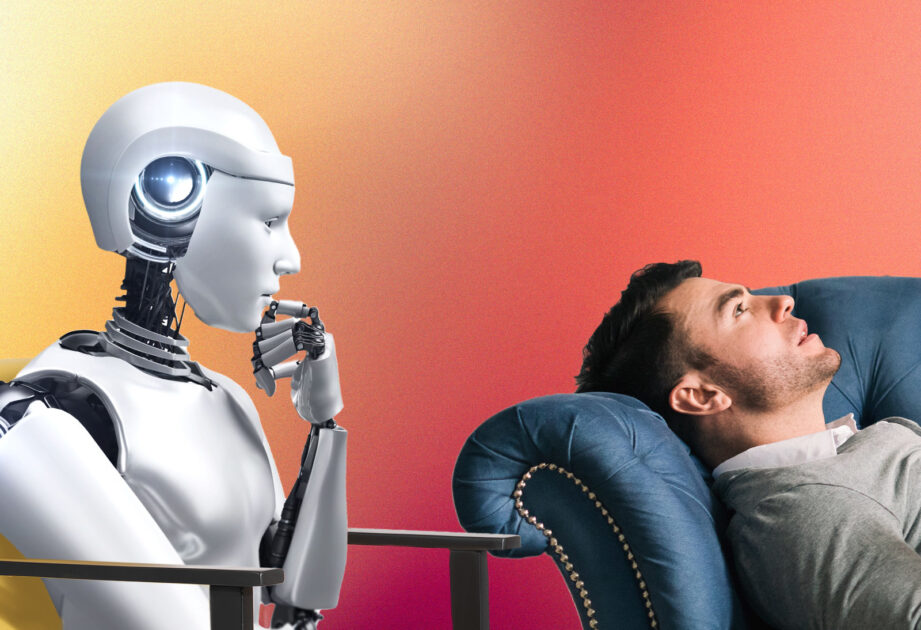

AI mental health bots often violate ethical norms, prompting calls for stronger oversight.

As more people turn to ChatGPT and other large language models (LLMs) for mental health support, new research has found that these systems often fail to meet ethical standards set by organizations such as the American Psychological Association, even when they are instructed to follow evidence-based therapeutic methods.

The study, led by computer scientists working with mental health professionals, found that LLM-based chatbots can commit multiple ethical breaches. These include mishandling crisis situations, giving misleading feedback that reinforces users' harmful beliefs about themselves, and creating a false sense of empathy that lacks genuine understanding.

“In this work, we present a practitioner-informed framework of 15 ethical risks to demonstrate how LLM counselors violate ethical standards in mental health practice by mapping the model’s behavior to specific ethical violations,” the researchers wrote in their study. “We call on future work to create ethical, educational, and legal standards for LLM counselors — standards that are reflective of the quality and rigor of care required for human-facilitated psychotherapy.”

The research was recently presented at the AAAI/ACM Conference on Artificial Intelligence, Ethics and Society. Members of the research team are affiliated with Brown University's Center for Technological Responsibility, Reimagination and Redesign.